Jeffrey Paul

Apple Has Begun Scanning Your Local Image Files Without Consent

15 January 2023


Preface: I don’t use iCloud. I don’t use an Apple ID. I don’t use the Mac App Store. I don’t store photos in the macOS “Photos” application, even locally. I never opted in to Apple network services of any kind - I use macOS software on Apple hardware.

Today, I was browsing some local images in a subfolder of my Documents folder: some HEIC files taken with an iPhone and copied to the Mac using the Image Capture program (used for dumping photos from an iOS device attached with a USB cable).

I use a program called Little Snitch which alerts me to network traffic attempted by the programs I use. I have all network access denied for a lot of Apple OS-level apps because I’m not interested in transmitting any of my data whatsoever to Apple over the network - mostly because Apple turns over customer data on over 30,000 customers per year to US federal police without any search warrant per Apple’s own self-published transparency report. I’m good without any of that nonsense, thank you.

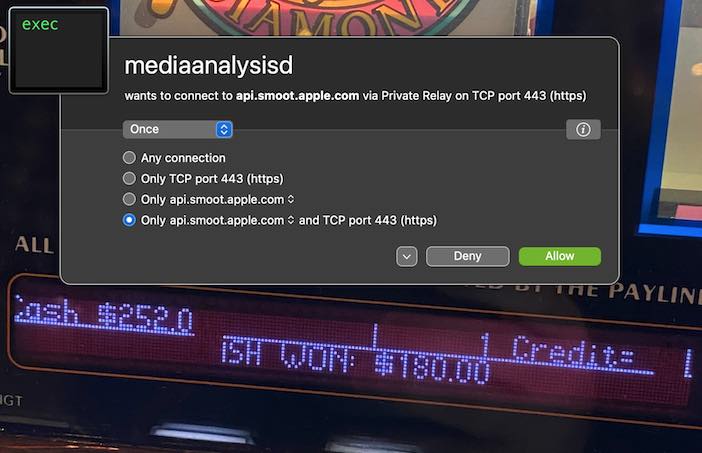

Imagine my surprise when, while I was browsing these images in the Finder, Little Snitch told me that macOS is now connecting to Apple APIs via a program named mediaanalysisd (Media Analysis Daemon - a background process for analyzing media files).

It’s very important to contextualize this. In 2021 Apple announced their plan to begin clientside scanning of media files, on device, to detect child pornography (“CSAM”, the term of art used to describe such images), so that devices that end users have paid for can be used to provide police surveillance in direct opposition to the wishes of the owner of the device. CP being, of course, one of the classic Four Horsemen of the Infocalypse trotted out by those engaged in misguided attempts to justify the unjustifiable: violations of our human rights.

Apple has repeatedly declared in their marketing materials that “privacy is a human right”, yet they offered no explanation whatsoever as to why those of us who do not traffic in child pornography might wish to have such privacy-violating software running on our devices. It was widely speculated at the time that they were doing this to get the FBI off their back so that they could roll out clientside end-to-end encryption for iCloud, something they were not at the time doing (which provided and preserved a backdoor in iMessage privacy, specifically for the FBI).

There was a large public backlash. Apple likely expected this.

Some weeks later, in an apparent (but not really) capitulation, Apple published the following statement:

> Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.

The media erroneously reported this as Apple reversing course.

Read the statement carefully again, and recognize that at no point did Apple say they reversed course or do not intend to proceed with privacy-violating scanning features. As a point of fact, Apple said they still intend to release the features and that they consider them “critically important”.

Apple is very good at writing technically truthful statements that say one thing yet cause reporters to report a different thing (which is not factual). This becomes an “everybody knows” sort of thing where the narrative that is widely believed and accepted by the public is not what Apple actually said. Apple PR exploits poor reading comprehension ability, while maintaining some sort of imagined moral integrity because they never made any factually false statements during their attempts to explicitly confuse and induce misreporting.

To recap:

- In 2021, Apple said they’d scan your local files using your own hardware, in service of the police.
- People got upset, because this is a clear privacy violation and is wholly unjustifiable on any basis whatsoever. (Some people speculated that such a move by Apple was to appease the US federal police in advance of their shipping better encryption features which would otherwise hinder police.)
- Apple said some additional things that did NOT include “we will not scan your local files”, but did include a confirmation that they intend to ship such features that they consider “critically important”.
- The media misreported this amended statement, and people calmed down.
- In late 2022, Apple shipped end-to-end encrypted options for iCloud.
- Today, Apple scanned my local files and those scanning programs attempted to talk to Apple APIs, even though I don’t use iCloud, Apple Photos, or an Apple ID. This would have happened without my knowledge or consent if I were not running third-party network monitoring software.

Who knows what types of media governments will legally require Apple to scan for in the future? Today it’s CP, tomorrow it’s cartoons of the prophet (PBUH please don’t decapitate me). One thing you can be sure of is that the database of images your hardware will now be used to scan for will regularly be amended and updated by people who are not you and are not accountable to you.

This is your first and only warning: Stock macOS now invades your privacy via the Internet when browsing local files, taking actions that no reasonable person would expect to touch the network, with iCloud and all analytics turned off, no Apple apps launched (this happened in the Finder, via spacebar preview), and no Apple ID input. You have been notified of this new reality. You will receive no further warnings on the topic.

Integrate this data and remember it: macOS now contains network-based spyware even with all Apple services disabled. It cannot be disabled via controls within the OS: you must use third-party network filtering software (or external devices) to prevent it.
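If you have no filtering software installed yet, a rough way to at least look for this kind of traffic is to list open Internet sockets with `lsof` and filter by process name. The following is a minimal sketch, not a substitute for a real network filter: it assumes `lsof` is on your PATH, the function names `parse_lsof_connections` and `list_connections` are made up for illustration, and it only sees sockets that exist at the moment you run it, so a short-lived or already-blocked connection attempt may never show up.

```python
import subprocess


def parse_lsof_connections(lsof_output, process_name):
    """Return (pid, endpoint) pairs for rows whose COMMAND equals process_name.

    Expects the default `lsof -i` column layout:
    COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME [(STATE)]
    """
    matches = []
    for line in lsof_output.splitlines()[1:]:  # first line is the header
        fields = line.split()
        if len(fields) < 9 or not fields[1].isdigit():
            continue  # skip rows that do not fit the expected layout
        command, pid, endpoint = fields[0], fields[1], fields[8]
        if command == process_name:
            matches.append((int(pid), endpoint))
    return matches


def list_connections(process_name):
    """Run lsof and return the Internet sockets held by process_name.

    `+c 0` asks lsof not to truncate the COMMAND column (the default
    width of nine characters would cut off longer daemon names);
    `-nP` disables host and port name lookups; `-i` selects Internet sockets.
    """
    result = subprocess.run(
        ["lsof", "+c", "0", "-nP", "-i"],
        capture_output=True,
        text=True,
    )
    # lsof exits non-zero when it finds nothing, so the return code is
    # deliberately not treated as an error here.
    return parse_lsof_connections(result.stdout, process_name)


if __name__ == "__main__":
    for pid, endpoint in list_connections("mediaanalysisd"):
        print(pid, endpoint)
```

Run it while previewing images in the Finder. Depending on which user the daemon runs as, you may need to run it with elevated privileges to see its sockets at all, and an empty result proves nothing.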

This was observed on the current version of macOS, macOS Ventura 13.1.

Aside: I can’t recommend Little Snitch enough. It’s literally the first software I install (via USB) on a fresh macOS, before I even enable Wi-Fi or plug in a network cable.

A final reminder: if you’ve nothing to hide and you’ve done nothing wrong, those are the times when it is most important to limit information transfer to law enforcement. Law enforcement obtaining data on criminals is not a tragedy. Law enforcement investigating innocent people leads to extreme injustice. You should reject all law enforcement surveillance attempts, obviously if you are a criminal, but especially if you are innocent.

As usual, you can comment on and discuss this post on the BBS.

About The Author

Jeffrey Paul is a hacker and security researcher living in Berlin and the founder of EEQJ, a consulting and research organization.